- Audio Article

- Disinformation — The Silent Enemy of Peace

- What Disinformation Is—and Isn’t

- Why Lies Travel Faster Than Corrections

- The Mechanics: How Disinformation Works in the Wild

- The Old Toolkit with New Batteries

- How Disinformation Fuels Conflict

- The Cost to Peace Even After the Shooting Stops

- The Psychology That Makes Us Vulnerable

- The Infrastructure That Helps Lies Win

- How Societies Can Fight Back—Without Becoming What They Fear

- Personal Practices That Scale

- Hope, Sober and Stubborn

- MagTalk Discussion

- Focus on Language

- Vocabulary Quiz

- The Debate

- Let’s Discuss

- Learn with AI

- Let’s Play & Learn

Audio Article

Disinformation — The Silent Enemy of Peace

Bombs are honest about what they do. They arrive with noise, heat, and debris, leaving no mystery about their intentions. Lies work differently. They prefer candlelight and closed doors; they whisper, they flatter, they entertain. And while bombs can flatten a neighborhood in a second, lies can corrode a society for a generation. Peace, which seems like a matter of borders and treaties, actually rests on something more delicate: trust—our belief that the words we trade are mostly faithful to reality, that institutions misstep but do not fabricate, that neighbors argue but do not invent. When disinformation takes root, trust becomes a rumor, and peace loses its foundation.

What Disinformation Is—and Isn’t

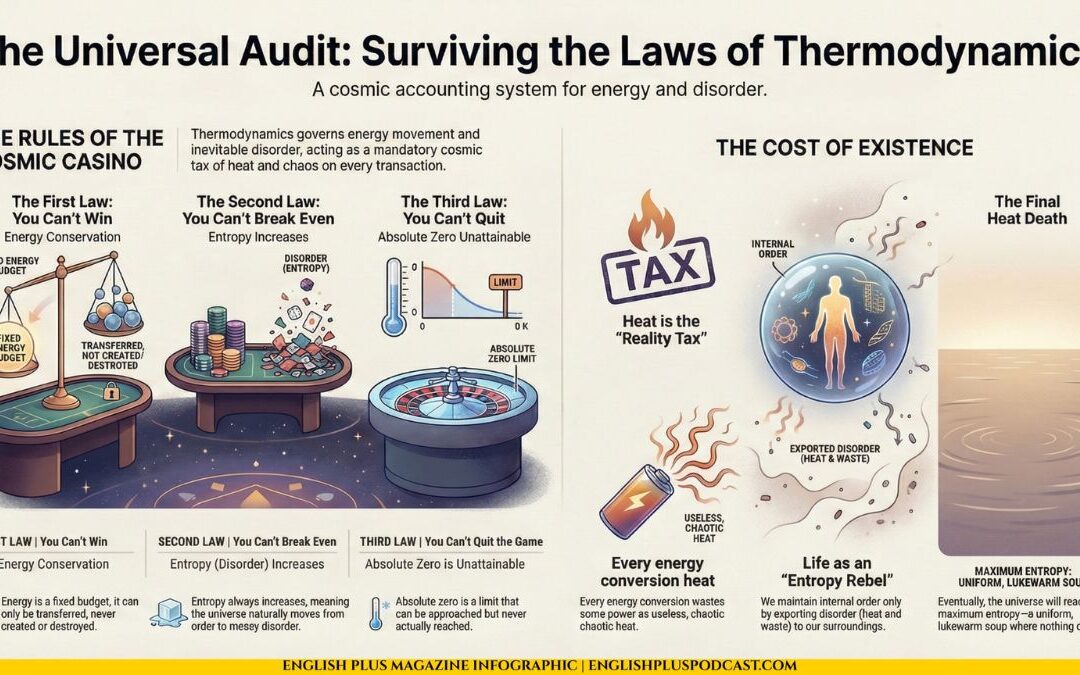

Let’s set our terms. Misinformation is wrong information passed along without malice; your uncle misreads a chart and shares it. Disinformation is a planned campaign to mislead; someone crafts a false narrative and amplifies it for advantage. Propaganda can be truthful, half-true, or false; its defining feature is not accuracy but intent—to persuade by controlling the frame, the feelings, and often the facts. Fake news isn’t just shabby reporting; it’s a genre of manufactured content posing as journalism, dressed in the fonts and tones of credibility. All of these, when deployed systematically, are social acid: they dissolve our ability to agree on what happened, to decide what to do, and to accept a loss without believing the game was rigged.

Why Lies Travel Faster Than Corrections

A falsehood is easier to package than a truth because it has no friction. The truth is constrained by reality; a lie can be tailor-made for our appetites. It flatters identity, confirms suspicion, and delivers certainty where the world insists on ambiguity. Add to that our cognitive wiring—confirmation bias, in-group loyalty, the thrill of novelty—and you have a perfect conveyor belt. Platforms do the rest: engagement-first algorithms boost the content that provokes, not the content that hesitates. Outrage, unlike nuance, comes pre-sharpened.

The Mechanics: How Disinformation Works in the Wild

The modern campaign often starts with an anchor—an image, a headline, a statistic—placed in a small but influential corner of the internet. Bots and paid networks amplify it, creating the illusion of consensus. Memes give it a joke’s clothing; screenshots detach it from context; miscaptioned videos graft it onto new events. A swarm of accounts then performs the second act: flood the zone. If one lie is debunked, three more appear, not to convince the skeptic but to exhaust the curious. The final act is reputational arson: anyone asking for evidence is labeled biased, corrupt, or part of a shadowy “they.” The point isn’t to win an argument; it’s to make the argument feel impossible.

The Old Toolkit with New Batteries

None of this is truly new. States and factions have always used rumor and theater to prepare the ground for conflict or to sanitize it after the fact. What has changed is velocity and intimacy. A pamphlet once took days to travel; a post now arrives in your palm before you finish a yawn. The message no longer addresses a crowd in a square; it speaks through your cousin’s account, your favorite gamer’s channel, or a parenting forum at 2 a.m. Disinformation doesn’t knock on the front door of public discourse; it slips into the group chat.

How Disinformation Fuels Conflict

Start with sorting: lies divide populations into camps that don’t simply disagree; they don’t share a reality. Then escalate: each camp receives curated horror stories about the other, the kind that turn political opponents into existential threats. Next comes justification: violence becomes preventive, repression becomes protection, and rights become loopholes for enemies to exploit. Finally, normalization: once distortions rule the narrative, those who resist are “extremists,” and those who abuse are “guardians.” No artillery required—just enough distortions to convince each side that the other side forfeited their claim to citizenship.

The Cost to Peace Even After the Shooting Stops

Suppose the guns go quiet. Disinformation lingers like smoke in drapes. Post-conflict reconstruction demands a shared picture of the past, however contested; lies make even basic timelines contentious. Courts struggle because witnesses consume different worlds. Elections become referendums on imaginary plots. Aid delivery is sabotaged by rumors about vaccines, food supplies, or evacuation lists. The war ends on paper; it continues in the comment section.

The Psychology That Makes Us Vulnerable

We are not fools; we are human. We prefer coherent stories to messy ones; we defend our identity with editorial vigor; we trust people who sound like us and distrust those who don’t. We are also tired—information-fatigued, time-poor, and chronically distracted. Disinformation campaigns exploit this: they supply frictionless answers, draped in identity signals, delivered during our weakest hours. The result is not gullibility but triage: we accept the quick, flattering version so we can move on with our day. Multiply that by millions of days, and the public sphere starts speaking a language reality doesn’t recognize.

The Infrastructure That Helps Lies Win

This is not only about psychology; it’s about plumbing. When recommendation engines are paid in attention, they buy more of it by promoting content that spikes emotion. When news deserts expand, conspiracies move in like opportunistic weeds. When education emphasizes testable facts but neglects epistemology—how we know what we know—graduates can parse a poem but not a pie chart. When independent media is starved and public media is stigmatized, the loudest voice sets the floor, not the most rigorous one. That is how the invisible war advances: through defaults, design choices, and budget lines.

How Societies Can Fight Back—Without Becoming What They Fear

The antidote to disinformation cannot be mass censorship; that’s a cure worse than the disease. The goal is resilience, not purity. It begins with transparency—platforms that label, throttle, and archive repeated falsehoods; political parties that publish ad libraries; institutions that show their work. It includes inoculation: prebunking that warns people about tactics before they encounter them, and media-literacy education that treats every student as a future editor. It requires pluralism: a media ecosystem where fact-checkers can disagree on emphasis but not fabricate. And it needs civic habits: communities that hold town halls, not just feeds; leaders who admit uncertainty and change course in public without calling it defeat.

Personal Practices That Scale

There are quietly radical things individuals can do. Slow down before sharing. Ask for the source, not out of smugness but curiosity. Diversify your inputs; follow at least one credible source that challenges your priors. Learn the anatomy of a misleading chart, and keep a short list of sites you cross-check when the stakes are high. Most of all, narrate your process aloud to family and friends: “I wanted to believe this, but I checked, and it didn’t hold up.” That sentence is a civic service; it models how adults change their minds.

Hope, Sober and Stubborn

Disinformation feels unbeatable because it is tireless; it doesn’t need sleep or shame. But truth has allies it often forgets to call: patience, verification, local trust, and the boring virtues of bureaucracy done well. Peace doesn’t demand universal agreement; it demands enough shared reality to argue productively. If we can rebuild that—classroom by classroom, feed by feed—the silent enemy loses its advantage. The noise will continue, but the signal will carry farther.

MagTalk Discussion

Focus on Language

Vocabulary and Speaking

Let’s learn from the language of this piece—words you can bring into daily conversations because they help you ask better questions and set clearer boundaries. Start with “hold up,” which we used to test claims: “Does this story hold up?” It means withstand scrutiny. You can apply it everywhere: diet plans, sales forecasts, gossip. When you say it, you’re not attacking; you’re inviting proof. Pair it with “source,” as in, “What’s the source?” That little question is not a courtroom hammer; it’s a handrail. It keeps you steady when a claim tries to sprint past your judgment.

“Frame” is a quiet workhorse. In disinformation, the frame decides what counts as relevant. In a team meeting, the frame can turn a failure into an experiment. In families, the frame can shrink blame and expand responsibility: “Let’s frame this as a scheduling problem, not a character flaw.” Changing the frame doesn’t erase facts; it rearranges their furniture so you can move through them without tripping.

“Flood the zone” describes an overwhelming tactic: release so much content that truth drowns in the volume. You can borrow it to describe life: the calendar flooded the zone this week; your inbox flooded the zone after a product launch. Naming the flood gives you permission to build a dam: delay replies, set expectations, focus on one channel.

“Identity cues” are signals that say who the message is “for.” They can be a logo, a dialect, a celebrity. In everyday life, identity cues help you choose tone: you text a colleague differently than a friend. In media consumption, noticing identity cues helps you ask whether a message is courting your loyalty more than your reason.

“Prebunk” is the cousin of debunk; it means anticipating a false claim and describing the trick before it arrives. Parents prebunk: “Someone might tell you this toy cures boredom; it doesn’t.” Managers prebunk: “You’ll see posts claiming we’re shutting down; here’s how to spot them.” It’s not pessimism; it’s preparation.

“Bad faith” is a valuable label, not to weaponize but to protect your energy. It describes a conversation where the other party isn’t seeking truth but points. If you suspect bad faith, narrow the scope: “Let’s check one claim.” Or set a boundary: “If we can’t agree on standards of evidence, we should pause.” In practice, calling the posture “bad faith” helps you decide whether to continue or conserve.

“Source triangle” is a helpful mental model: primary (the data or event), secondary (analysis by experts), tertiary (summaries and textbooks). In daily life, aim to touch at least the secondary level before you form a strong opinion. For health claims, peek at the primary: the study itself. You don’t have to be a scientist to read the abstract and ask basic questions about sample size or controls.

“Walk back” earns its place on this list because it’s the social lubricant of intellectual honesty. When you walk back a claim, you’re not surrendering; you’re editing. Use it publicly: “I want to walk back what I said about that poll; I overstated the margin.” That tone keeps relationships and reputations intact.

“Signal-to-noise” gives you a graceful way to say, “We’re not learning anything.” At work, you might say, “This thread has a low signal-to-noise ratio—can we switch to a short call with the data on screen?” At home, “Our late-night debates have become noise—let’s talk after coffee.” It’s frank without being cruel.

Now, let’s build these into your speaking. Imagine delivering a short update to a community group worried about a viral rumor. You could start with tone and tools: “Before we react, let’s check whether the claim holds up and look at the source triangle.” You move the room from panic to process. Then you sketch the tactics: “The rumor’s account flooded the zone yesterday with identity cues meant for us. Prebunking this now will save us from a week of whack-a-mole.” You’ve named the move; now it’s less spooky. If someone challenges you with heat but little evidence, you keep the door open without inviting chaos: “If we can agree on standards of evidence, I’m happy to continue; otherwise, we’re burning time.” You close by preserving dignity: “I’m going to walk back something I said at last month’s meeting about the budget; new numbers changed the picture.” That generosity to your past self gives permission for everyone else to update, too.

Your speaking workout: record a ninety-second voice note about a trending claim. Use at least eight of the phrases above—hold up, source, frame, flood the zone, identity cues, prebunk, bad faith, source triangle, walk back, signal-to-noise. Start with your process (“I checked the primary source…”), narrate your decision to slow down, and end with a practical next step (“We’ll prebunk similar posts next week by sharing three questions to ask before you share”). Then listen back for pacing. Trim filler. Add one metaphor—just one—that clarifies, not decorates. Repeat the exercise with a friend playing a skeptical audience; practice keeping your voice calm while your argument stays firm. That’s rhetorical resilience.

Grammar and Writing

Writing challenge: craft an 800–1,000-word op-ed titled “Trust Is Infrastructure.” Your thesis is simple and hard: disinformation isn’t just a nuisance of the internet age; it’s a structural threat to peace because it breaks the civic tools we use to disagree productively. You’ll argue for three countermeasures—transparency by default, prebunking in schools and workplaces, and pluralistic media ecosystems—and you’ll show how each reduces the temperature without dampening free expression.

Blueprint:

Open with a scene. “By lunchtime, the rumor that the clinic was injecting microchips had emptied the waiting room. The nurse who’d delivered half the town’s babies sat with her hands in her lap, as if she’d misplaced the instrument she used most: trust.” This opening animates stakes without scolding. Then pivot to your thesis with a concessive clause: “Although free speech can tolerate noise, peace cannot survive when lies monopolize attention.” Concessives (“although,” “even though”) add maturity to your stance: you hold two truths at once.

Paragraph structure: use the “claim–because–so” loop to prevent drift. “Transparency counters disinformation because it starves rumor of mystery; so public dashboards of spending and policy drafts are not luxuries but guardrails.” Parallelism will make your argument march: “We need platforms that label, schools that prebunk, and leaders who walk back.” Parallel triads stick in memory; they’re rhythm turned into logic.

Grammar moves:

Modulated modality. Modals like “must,” “should,” and “can” signal strength; pair them with hedges for credibility: “We should require ad libraries, and we can likely do it without chilling debate.” The hedge (“likely”) marks humility; the modal marks urgency.

Appositives to compress evidence. “Prebunking, a strategy that teaches people to spot manipulative tactics before exposure, improves skepticism without cynicism.” The appositive (“a strategy…”) prevents a separate sentence and sustains momentum.

Relative clauses for specificity. “Rumors that thrive in information deserts—regions where local reporting has withered—spread faster because there’s nothing to contest them.” The clause makes your generalization testable.

Balanced coordination. Use semicolons to stitch related claims of equal weight: “Censorship breeds martyrs; transparency breeds boredom.” That second clause is cheeky and tight—it turns a principle into a punchline.

Tense control for authority. Present tense for general truths (“Disinformation corrodes institutions”), past tense for examples (“A fake photo last winter tanked clinic attendance”). One paragraph in present perfect (“has been rising”) can mark trends.

Cohesion with demonstratives. “This erosion,” “that reflex,” “these tactics” act like thread. Each “this” points backward while pulling the reader forward.

Editing checklist:

- Remove the performative moralizing. Replace “people are stupid” energy with “people are busy” empathy.

- Verify every number twice. If you’re not sure, swap it for a qualitative description or cite a range.

- Vary sentence length. If you cannot read one sentence aloud without a breath, split it.

- End with an actionable paragraph. “Ad libraries, prebunking modules, and civic dashboards are not glamorous. They are the dish soap of democracy—unglamorous, indispensable, and best used daily.”

Writing technique clinic:

- Use negative capability sparingly. Admit uncertainty where it exists: “It’s difficult to measure the exact impact of a single rumor, but we can measure clinic traffic before and after.” Precision earns trust.

- Practice metaphor discipline. One sustained metaphor—trust as infrastructure—is enough. Resist adding a forest, a ship, and a chessboard.

- Build ethical language around opponents. Argue with ideas, not with caricatures. If you model fairness to those you think are wrong, you protect your piece from being used as a cudgel.

As you draft, imagine the skeptical but persuadable reader: someone who dislikes disinformation but fears overreach. Address that fear explicitly: “Safeguards must be content-agnostic and process-specific: we regulate the shape of the pipe, not the flavor of the water.” That sentence, with its tidy antithesis, shows you’ve thought about liberty as carefully as you’ve thought about harm.

Finally, read the op-ed aloud to someone who doesn’t track policy. If their eyes glaze when you say “epistemic,” swap it for “how we know what’s true.” If they say, “I don’t know what to do after reading this,” add one paragraph of practical steps for individuals and one for institutions. The piece should end with posture and plan.

Vocabulary Quiz

The Debate

Let’s Discuss

- What is the line between persuasion and manipulation in political communication? Explore intent, transparency, and whether the audience has access to counterarguments.

- Should platforms be required to downgrade repeat falsehoods, and if so, who decides the standard of falsity? Consider independent audits, public archives, and appeal processes.

- How can communities prebunk effectively without sounding condescending? Think about using local messengers, storytelling, and practical checklists instead of lectures.

- What responsibilities do schools and employers have in teaching verification skills? Map what a ten-minute weekly drill could look like and how to measure improvement.

- When trust is broken, what repairs it faster: apologies, transparency, or time? Share examples from institutions that regained credibility—and those that never did—and why.

Learn with AI

Disclaimer:

Because we believe in the importance of using AI and other technological advances in our learning journey, we have added a section called Learn with AI to bring yet another perspective to our learning and see if we can learn a thing or two from AI. We mainly use OpenAI, but sometimes we try other models as well. We asked AI to read what we have said so far about this topic and tell us, as an expert, about other things or perspectives we might have missed, and this is what we got in response.

Let’s talk about three blind spots. First, supply-side saturation. We obsess over demand—why people believe—but we under-invest in the pipe that carries lies. A handful of monetized pages, ad networks with low standards, and a cottage industry of engagement farms create an always-on spigot. Turning the pressure down doesn’t require speech police; it requires procurement hygiene. Advertisers can refuse to bankroll known manipulators; payment processors can enforce basic disclosure; platforms can rate outlets on process transparency the way restaurants get health grades. No ideology required—just plumbing.

Second, midstream moderators. We rely on underpaid, undertrained moderators to clean the Augean stables of the internet. Burnout is guaranteed, inconsistency inevitable. Professionalizing this layer—clear guidelines, mental-health support, rotating duty cycles, and appealable decisions logged in public ledgers—doesn’t solve everything, but it turns ad hoc cleanup into a civic service. Imagine telling citizens, “If we remove your post, here’s the exact clause, the timestamp, and a link to challenge.” That is dignity, not deletion.

Third, local credibility engines. National media fights national fires, but rumors ignite locally. A trusted librarian, a clinic director, a farmers’ cooperative chair—these are credibility engines. Fund them. Give them template prebunks, office hours, and a WhatsApp broadcast list. When a rumor appears, the correction should arrive with the same accent and at the same speed. It’s astonishing how many crises shrink when the right person says, “I checked.”

Two closing ideas. Audit your information diet like you’d audit your pantry. Keep staples (primary sources, reputable analysis), treats (opinion you enjoy but verify), and a trash can (accounts you unfollow when they repeatedly fail the hold-up test). And narrate your corrections. The most powerful sentence in a disinformation age might be: “I changed my mind because I learned something.” That’s not a confession; that’s a culture.

Let’s Play & Learn

Learning Quiz: Can You Spot Disinformation in 20 Real-Sounding Headlines?

Disinformation doesn’t always look like a wild conspiracy theory. Often, it sounds reasonable—just confident enough to slip past your defenses. This quiz gives you realistic, fictional news excerpts and asks you to classify each as reliable, misleading, or fake. You’ll get hints to guide your thinking and rich feedback that explains not only the correct choice but the reasoning behind it: source credibility, evidence, context, logical fallacies, image/video authenticity cues, and language signals like hedging or sensationalism. By the end, you’ll read headlines more carefully, spot red flags faster, and communicate your analysis clearly in English—skills you can use in school, work, and daily life.

Learning Quiz Takeaways

If disinformation were always loud and ridiculous, we’d all be experts at spotting it. The trouble is that much of it arrives wearing a sensible jacket: a precise percentage, a cropped chart, a confident headline, a familiar URL that’s just one letter off. The point of this lesson is to turn your casual suspicion into a repeatable method—a way to move from “I have a bad feeling about this” to “Here’s exactly why this is unreliable, misleading, or fake,” and to express that reasoning clearly in English.

Start with source and traceability. Reliable reports tell you who, when, and how. They link to primary materials—laws, datasets, full speeches, studies—and they name institutions, authors, and methods. In the quiz, the city air-quality report (Question 5) provided a model: named document, link, methodology, and raw data. This transparency lets you verify claims and quote responsibly. A useful habit: when you see a statistic, ask, “Can I click through to the study, and does the study describe its sample, method, and limitations?” If the trail ends in vague phrases like “experts say” or “a recent study,” your confidence should drop.

Next, consider proportionality and presentation. Numbers do not speak for themselves; visuals shape how we hear them. Question 6 showcased a familiar trick: a truncated y-axis that makes modest changes look dramatic. Your move is to reframe the claim. Instead of repeating “Crime down 50%,” describe what the full-scale chart would show and ask for per-capita or long-term trends. This reframing is more than pedantic—it’s persuasive clarity, the kind that improves your own writing and helps your audience reason with you rather than react to spectacle.
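
To see why a truncated axis is so effective, it helps to put numbers on it. The short Python sketch below is only an illustration: the two values (48 and 50) and the axis start (45) are hypothetical figures chosen for the example, not data from the quiz. It compares how tall two bars are drawn when the y-axis starts at zero with how tall they are drawn when the axis starts just below the data.

```python
def visual_ratio(before: float, after: float, axis_start: float = 0.0) -> float:
    """Ratio of the drawn bar heights when the y-axis begins at axis_start."""
    if axis_start >= min(before, after):
        raise ValueError("axis_start must be below both values")
    return (after - axis_start) / (before - axis_start)


# Hypothetical values, chosen only to illustrate the effect.
before, after = 48.0, 50.0

true_ratio = visual_ratio(before, after, axis_start=0.0)        # 50 / 48, about 1.04
truncated_ratio = visual_ratio(before, after, axis_start=45.0)  # 5 / 3, about 1.67

print(f"Zero baseline: second bar is {true_ratio:.2f}x the first")
print(f"Axis from 45:  second bar is {truncated_ratio:.2f}x the first")
```

Under these assumptions, a change of about 4% is drawn as a bar roughly two-thirds taller. That gap between what the data says and what the picture shows is the thing to name when you reframe the claim.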

Then comes context, both temporal and textual. Short videos and photos are especially vulnerable to misinterpretation. In Question 4, a seven-second clip “proved” a confession by cropping mid-sentence. In Question 18, a photo from before an event tried to stand in for turnout during the event. Your rule of thumb: if the claim depends on a tiny slice of time, look for the whole timeline. Ask for full recordings, transcripts, metadata, and corroborating angles. In your own analysis, model the behavior you want to see: acknowledge what the clip shows, then explain what additional context is necessary for a fair judgment.

Distinguish between fake and misleading. Fake content fabricates reality: impostor domains, recycled photos labeled as new, invented institutions, or impossible scientific claims. Question 2’s near-match URL and Question 11’s repurposed protest photo are classic fakes. Misleading content, by contrast, starts with something real and bends it—omits denominators, hides caveats, confuses committee votes with final passage, or overreads a statute (Questions 14, 19, and 16). Why does the distinction matter? Because the remedy differs. Fakes need debunking and source warnings; misleading items benefit from added context and careful rewording that corrects without overcorrecting.

Evaluate claims by their evidence burden. Extraordinary claims—miracle cures, cosmic predictions—require extraordinary evidence: peer-reviewed studies, multiple expert confirmations, detailed methods. Question 10’s “vitamin cures arthritis” is a perfect example: big claim, zero trials, direct financial incentive. In your own writing, match the strength of your language to the strength of your proof. “Suggests,” “is associated with,” and “early evidence indicates” are not signs of weakness; they signal intellectual honesty. This linguistic precision earns trust over time.

Sampling and generalization deserve special attention. Question 17 confronted a self-selected poll being used to allege election theft. A simple, powerful question dissolves the illusion: “Who was sampled and how?” Random, representative sampling justifies generalization; comment-box polls do not. When you practice persuasive English, bring the audience with you by defining the technical terms—margin of error, confidence interval, representative sample—in everyday language. Doing so not only clarifies the logic, it models respectful teaching rather than gotcha rhetoric.
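
If you want to explain “margin of error” with a number, here is a minimal sketch using the standard textbook formula for a simple random sample at roughly 95% confidence (z = 1.96). The sample size and the 50% share are hypothetical. The formula assumes random sampling, so it does not apply to a self-selected comment-box poll, however many people respond.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate margin of error for a proportion p from a simple random sample of size n."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n <= 0:
        raise ValueError("n must be positive")
    return z * math.sqrt(p * (1.0 - p) / n)


# Hypothetical poll: 1,000 randomly chosen respondents, 50% support.
moe = margin_of_error(p=0.5, n=1000)
print(f"Margin of error: about {moe * 100:.1f} percentage points")  # about 3.1
```

In everyday language: with 1,000 randomly chosen people, a result of 50% most likely reflects a true figure somewhere between about 47% and 53%. No similar statement can be made when respondents choose themselves.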

Be alert to motive and incentives—but don’t stop there. A site that sells a product it praises (Question 10) deserves extra scrutiny, yet bias alone doesn’t prove falsehood. Your goal is to pair motive analysis with evidence analysis: identify the incentive and then ask, “Does the evidence still stand?” This two-step approach keeps you fair and focused. It also helps you avoid the ad hominem fallacy, where you attack the speaker instead of the claim.

Language cues are subtle but telling. Reliable reporting often includes hedging verbs, explicit limitations, and links. Misleading or fake pieces lean on absolutes (“prove,” “always,” “cures”), alarm bells (“BREAKING,” “secret plan”), and emotionally loaded framing designed to bypass analysis. But language is only a clue. Combine it with the other checks—traceability, proportionality, context, and sampling—to avoid both credulity and cynicism.
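
As a rough illustration of the language check, the sketch below scans a headline for the absolutes and alarm words named above. The word list is taken from this paragraph and is far from complete, and the function name is made up for the example. A match is a prompt to look closer, not a verdict.

```python
import re

# Cue words drawn from the paragraph above; an illustrative list, not a complete one.
CUE_PATTERN = re.compile(
    r"\b(prove[sd]?|always|cures?|breaking|secret plan)\b",
    re.IGNORECASE,
)


def flag_language_cues(headline: str) -> list[str]:
    """Return the cue words found in the headline, lowercased, in order of appearance."""
    return [match.group(0).lower() for match in CUE_PATTERN.finditer(headline)]


print(flag_language_cues("BREAKING: Study proves vitamin cures arthritis"))
print(flag_language_cues("Early evidence suggests a possible link"))
```

The first headline returns three cues and the second returns none. Neither result settles anything on its own, because a careful report can contain the word “always” and a fabricated one can be written in calm, hedged prose.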

Technical tools amplify your judgment. Reverse image search can expose recycled photos (Question 11). Checking the Internet Archive or WHOIS records can reveal domain impersonation (Question 2). Reading the primary law or report can deflate sensational claims built on misread documents (Question 16). Even when you don’t have the tools at hand, knowing they exist helps you form the right questions and resist instant reactions.
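
The “one letter off” trick can also be checked mechanically. The sketch below is a simplified illustration, not a description of how any particular tool works: it uses edit distance (the number of single-character changes needed to turn one string into another) to compare a domain against a short list of sites you already trust. The domain names are invented and use the reserved `.example` ending.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum single-character insertions, deletions, or substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete from a
                            curr[j - 1] + 1,      # insert into a
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def near_matches(domain: str, trusted: list[str], max_distance: int = 1) -> list[str]:
    """Trusted domains that are close to, but not identical to, the given domain."""
    domain = domain.lower()
    return [t for t in trusted
            if 0 < edit_distance(domain, t.lower()) <= max_distance]


# Hypothetical domains for illustration only.
trusted_sites = ["cityhealth.example", "dailyrecord.example"]
print(near_matches("cityhea1th.example", trusted_sites))  # the digit 1 replaces the letter l
print(near_matches("cityhealth.example", trusted_sites))  # exact match, so nothing is flagged
```

A near match is a reason to check the address bar twice, not proof of impersonation. Some legitimate sites do have similar names.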

When you correct others—or write your own analyses—aim for clarity without condescension. Good corrections do three things: state what’s true, explain why the earlier claim was wrong, and offer a better way to frame the issue next time. For instance: “This chart shows a drop, but because the y-axis starts at 45%, it exaggerates the change. Using a zero baseline or per-capita rates gives a more accurate picture.” Notice the tone: it invites thinking and preserves dignity. That tone makes people more likely to update their views.

Finally, cultivate intellectual posture: humility plus persistence. Humility says, “I might be missing something; let me check.” Persistence says, “I won’t let a confident headline make decisions for me.” Together they form your media-literacy spine. In practice, that means pausing before sharing, skimming for links, clicking through, and, when necessary, withholding judgment until you can verify. It also means being comfortable with provisional conclusions: “According to the city’s 2024 report and two named experts, air quality has improved, though we should watch next year’s data to confirm the trend.”

To make this practical, try a three-step checklist in daily reading. Step 1—Trace: Who said it, and can I click the source? Step 2—Test: Does the presentation (visuals, wording, sample) fairly reflect the evidence? Step 3—Translate: How would I restate this claim with appropriate strength and context? If a headline says, “Study proves,” your translation might be, “A preprint suggests a correlation, pending peer review and replication.” That single sentence shows your audience you understand both the promise and the limits of new information.

Media literacy isn’t about becoming cynical; it’s about becoming skillfully curious. With the habits you just practiced—checking sources, inspecting visuals, demanding context, weighing evidence proportionally, and communicating your reasoning with care—you won’t just spot fakes and distortions; you’ll also recognize and elevate good information. In a noisy world, that’s not only self-defense—it’s a public service.
