
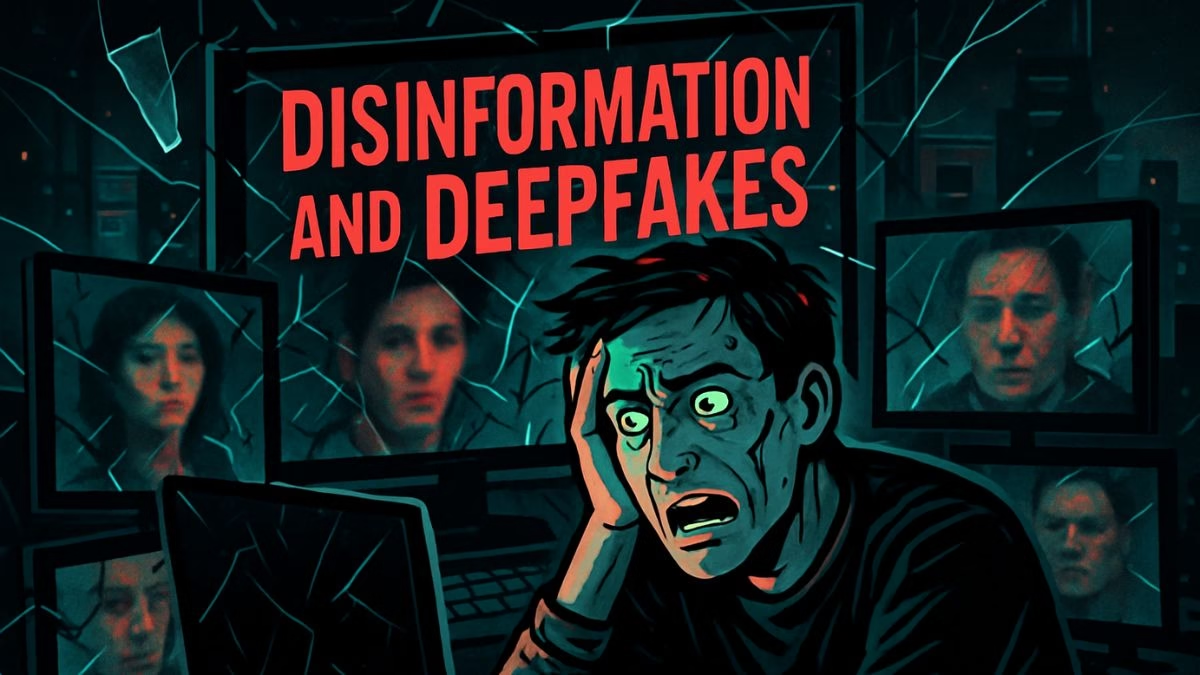

The Ghost in the Machine Gets a Voice

For decades, we’ve operated under a fundamental, almost sacred, assumption: our eyes and ears don’t lie. A photograph was a moment captured in time. A video recording was an immutable record of events. An audio clip was proof someone said something. Of course, we knew about photo manipulation and clever editing, but these were acts of skilled deception, often detectable, always requiring human artistry and effort. That assumption, the bedrock of our trust in evidence, is now crumbling. In its place, a new and unsettling reality is emerging, one populated by digital ghosts—perfectly crafted, AI-generated facsimiles of people, saying and doing things they never did.

This isn’t science fiction anymore. We have entered the age of synthetic media. The technologies of artificial intelligence, once confined to research labs and the esoteric dreams of computer scientists, have become astonishingly powerful and widely accessible. They can write essays, create photorealistic images, and, most alarmingly, generate video and audio that are virtually indistinguishable from the real thing. This is the world of deepfakes, of synthetic text, of a technological frontier that is both exhilarating and terrifying.

This article is a dispatch from that frontier. We will demystify the technology that summons these digital ghosts, explaining in simple terms what’s happening under the hood of these powerful AI models. We will uncover the most insidious threat they pose: the “liar’s dividend,” a social phenomenon that could poison our ability to agree on a shared reality. Finally, we will explore the burgeoning counter-offensive—the technological arms race for truth, where a new generation of AI is being trained to hunt the very ghosts its predecessors created. The machine is learning to talk, to see, and to create. Now, we have to learn how to listen for the lie.

Summoning the Specters: A Guide to Synthetic Media

Before we can grapple with the consequences, we need to understand the tools. The term “AI-generated content” is a broad umbrella for a suite of technologies that have matured at a breathtaking pace. While the underlying math is fearsomely complex, the core concepts are surprisingly intuitive.

The Deepfake: A Mask of a Million Faces

The word “deepfake” is a portmanteau of “deep learning” (a type of AI) and “fake.” At its heart, a deepfake is a piece of media, most often a video, in which a person’s face has been replaced by someone else’s, with the movements and expressions convincingly synthesized. One of the best-known technologies behind it is the Generative Adversarial Network, or GAN, although many face-swapping tools rely on related deep-learning models such as autoencoders.

A brilliant analogy helps explain the process. Imagine two AIs: one is a forger, the “Generator,” whose job is to create fake paintings in the style of Picasso. The other is an art critic, the “Discriminator,” whose job is to tell the fakes from the real Picassos. At first, the forger is terrible, and the critic spots the fakes instantly. But the forger learns from its mistakes, getting better and better, while the critic also gets sharper at spotting forgeries. They are locked in a duel. After millions of rounds, the forger becomes so good that the critic can no longer tell the difference. That’s a GAN. Now, just replace “Picasso paintings” with “videos of a politician’s face.”
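To make the forger-and-critic duel concrete, here is a deliberately tiny sketch in Python. It is not how real deepfake systems are built. The “paintings” are single numbers drawn from a bell curve, the critic is a one-variable logistic classifier, and the forger can learn only one thing: how far to shift its random noise. All names and settings (`train_toy_gan`, the learning rates, the starting offset of 3.0) are illustrative assumptions.

```python
import math
import random


def sigmoid(t: float) -> float:
    # Logistic function, written to avoid overflow for large |t|.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 1000, batch: int = 64, seed: int = 0) -> float:
    """Return the forger's learned shift after `steps` rounds of the duel."""
    rng = random.Random(seed)
    shift = 3.0          # forger: fake = noise + shift, starts far from the real data
    w, c = 0.0, 0.0      # critic: D(x) = sigmoid(w * x + c), its "probability x is real"
    lr_critic, lr_forger = 0.2, 0.1

    for _ in range(steps):
        real = [rng.gauss(0.0, 1.0) for _ in range(batch)]   # real data: N(0, 1)
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [z + shift for z in noise]

        # Critic step: gradient descent on -log D(real) - log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            miss = 1.0 - sigmoid(w * x + c)
            gw -= miss * x
            gc -= miss
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr_critic * gw / batch
        c -= lr_critic * gc / batch

        # Forger step: gradient descent on -log D(fake) with respect to `shift`.
        g_shift = 0.0
        for z in noise:
            d = sigmoid(w * (z + shift) + c)
            g_shift -= (1.0 - d) * w
        shift -= lr_forger * g_shift / batch

    return shift
```

Each pass through the loop is one round of the duel: the critic gets slightly better at separating real from fake, then the forger nudges its output in whichever direction the critic currently rates as “more real.” Because this critic only looks at position, the forger ends up matching where the real data sits (a shift near 0), not its full shape. Real GANs put deep networks on both sides to capture far richer detail.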

The result is a technology that can, with enough data (photos and videos of the target), create a hyper-realistic digital mask that can be mapped onto another person’s performance. Early deepfakes were glitchy and uncanny, but modern iterations are terrifyingly smooth, capturing the subtle nuances of expression and emotion that our brains are wired to perceive as authentic.

The Eloquent Ghost: GPT and the Rise of Synthetic Text

While deepfakes hijack our sight, another class of AI is mastering language. You’ve likely heard of models like GPT (Generative Pre-trained Transformer). These are Large Language Models (LLMs) trained on a truly mind-boggling amount of text from the internet. They don’t “understand” language in the human sense, but they have become masters of statistical pattern recognition. They learn the intricate relationships between words, sentences, and ideas, allowing them to generate human-like text on almost any topic.
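“Statistical pattern recognition” can be illustrated with a far simpler ancestor of an LLM: a bigram model, which only counts which word tends to follow which. The Python sketch below works under that simplification. Real LLMs use transformer networks trained on vast corpora and weigh long stretches of context, not just the previous word. The function names and the tiny training sentence are made up for the example.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows which in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedily append the most frequent next word (ties go to the first seen)."""
    out = [start]
    for _ in range(length):
        options = follows.get(out[-1])
        if not options:
            break  # this word was never followed by anything in training
        out.append(options.most_common(1)[0][0])
    return out


model = train_bigrams(
    "the critic spots the fake . the forger improves the fake . the critic fails"
)
print(generate(model, "the", 4))  # ['the', 'critic', 'spots', 'the', 'critic']
```

Even this toy produces fluent-looking fragments it never saw as a whole, and it quickly loops, which hints at why modern models need vastly more data and context to stay coherent.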

Give an LLM a prompt, and it can write a poem, a legal brief, a computer program, or, more troublingly, a thousand unique, subtly different, and highly persuasive propaganda articles. It can mimic the writing style of a specific person or publication. These models are the engine of a new kind of disinformation: infinitely scalable and effortlessly customized. Imagine a political campaign that doesn’t just write one or two talking points, but generates a personalized, persuasive email for every single voter, tailored to their specific hopes and fears gleaned from data profiles. That is the power, and the peril, of synthetic text.

The AI’s Easel: Generative Art and Plausible Pasts

The final piece of the puzzle is generative art. AI models like Midjourney and DALL-E can now create stunningly realistic or artistically stylized images from simple text prompts. While often used for creative and harmless purposes, this technology can also be used to create plausible, photographic “evidence” of events that never happened. A user can type “photo of Senator X accepting a briefcase of money in a dimly lit garage, 1990s film grain,” and the AI will produce it.

This ability to generate a plausible past is a potent tool for historical revisionism and character assassination. While a single fake photo might be debunked, a flood of them, subtly altered and distributed across social media, can create a miasma of doubt. It muddies the waters, making the real and the fake indistinguishable in the digital tide.

The Liar’s Dividend: When Reality Itself is Debased

The most immediate fear surrounding deepfakes is that we will be fooled by a fake video of a world leader declaring war, or a fabricated audio clip of a CEO admitting to fraud. While this is a serious and valid concern, the true, long-term danger is something more subtle and perhaps more corrosive.

The term for this is the “liar’s dividend,” coined by the law professors Bobby Chesney and Danielle Citron. The concept describes a second-order effect of synthetic media: the real danger isn’t just that people might believe a fake video, but that bad actors can plausibly deny a real one. It is the benefit that accrues to liars once the public knows that perfect fakes are possible.

The Assassin’s Veto on Truth

The point is chilling. In a world where we all know that audio and video can be perfectly fabricated, a politician caught on a hot mic making racist remarks can simply claim, “That’s not me. It’s a deepfake.” A dictator whose soldiers are filmed committing war crimes can dismiss the footage as a sophisticated AI-generated attack by his enemies. The mere existence of the technology provides a get-out-of-jail-free card for any bad actor caught on tape.

We might call this an “assassin’s veto” on evidence. It grants anyone the power to retroactively veto any inconvenient piece of audio-visual proof simply by invoking the specter of AI. It erodes the power of journalism, law enforcement, and historical record-keeping. If any video can be dismissed as a potential fake, what constitutes proof anymore?

We can already see this in nascent forms. Political figures in several countries, when confronted with incriminating audio, have claimed that it could be a deepfake. The defense has not yet fully taken hold, but as public awareness of the technology grows, it could become the default playbook within a few years.

This creates a dystopian scenario where our society splits into evidence-based and faith-based realities. Your belief about whether a video is real or fake will depend not on forensic analysis, but on whether it confirms your pre-existing political biases. The liar’s dividend doesn’t just help individual liars; it pays out to the entire machinery of propaganda, polarization, and chaos. It debases the very currency of truth.

The Arms Race for Reality: Fighting Fire with Fire

The picture may seem bleak, but the story doesn’t end there. As the tools for generating synthetic media have grown more powerful, so too have the efforts to detect them. This has kicked off a high-stakes, behind-the-scenes technological arms race for the future of truth.

The Digital Watermark and the AI Detective

The fight against synthetic media is being waged on multiple fronts. One promising avenue is proactive: building safeguards into the AI models themselves. Some researchers are developing methods for “digital watermarking,” where an invisible, cryptographically secure signal is embedded into any content an AI generates. This watermark would be imperceptible to humans but easily readable by a scanner or a piece of software, providing instant verification of the content’s synthetic origin. The challenge, of course, is that this would require the cooperation of all AI developers, including open-source projects and potentially nefarious state actors who would have no incentive to participate.
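Here is a toy illustration of the idea in Python. It is an assumption-laden sketch, not any developer’s actual scheme: the “image” is a flat list of 0 to 255 pixel values, the secret key is a made-up byte string, and the signal is an HMAC-SHA256 tag hidden in the lowest bit of the first 256 pixels, which changes each of those pixels by at most one brightness level.

```python
import hashlib
import hmac

TAG_BITS = 256  # an HMAC-SHA256 tag is 32 bytes = 256 bits


def _tag(key: bytes, pixels: list[int]) -> bytes:
    # Authenticate the image with its lowest bits cleared, so the tag
    # does not depend on the bits we are about to overwrite.
    cleared = bytes(p & 0xFE for p in pixels)
    return hmac.new(key, cleared, hashlib.sha256).digest()


def embed(key: bytes, pixels: list[int]) -> list[int]:
    """Hide the tag in the lowest bit of the first 256 pixel values (0-255)."""
    if len(pixels) < TAG_BITS:
        raise ValueError("image too small for the watermark")
    tag = _tag(key, pixels)
    bits = [(byte >> i) & 1 for byte in tag for i in range(8)]
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & 0xFE) | bit
    return marked


def verify(key: bytes, pixels: list[int]) -> bool:
    """Recompute the tag and compare it with the hidden bits."""
    if len(pixels) < TAG_BITS:
        return False
    bits = [p & 1 for p in pixels[:TAG_BITS]]
    hidden = bytes(
        sum(bits[8 * j + i] << i for i in range(8)) for j in range(TAG_BITS // 8)
    )
    return hmac.compare_digest(hidden, _tag(key, pixels))
```

Real watermarking research aims for signals that survive compression, cropping, and re-recording. This least-significant-bit version would be wiped out by almost any edit, and because it relies on a shared secret, only someone holding the key can check it. That fragility is one reason the cooperation problem matters so much.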

The other, more reactive front is detection. This is where AI is being used to fight AI. Researchers are training AI models on vast datasets of both real and fake media. These “AI detectives” learn to spot the subtle, almost imperceptible artifacts that generative models leave behind.

Early deepfakes had easy “tells,” like unnatural blinking patterns, odd artifacts around the hair and ears, or a glossy smoothness to the skin. The newer models are much better, but they still leave subtle traces. They might struggle with the physics of light reflecting in the cornea of the eye, or with the faint, rhythmic changes in skin color caused by blood flow. A human might not notice these, but a trained AI, looking at the raw pixel data, often can.
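A real detector is a deep neural network trained on huge labelled datasets, but the core idea (learn from examples of both classes, then score new material) can be sketched with a nearest-centroid classifier in Python. Everything here is hypothetical: each clip is reduced to two invented “artifact scores” (say, blink irregularity and reflection mismatch, scaled from 0 to 1), and the training numbers are made up purely for illustration.

```python
import math

Features = tuple[float, float]  # hypothetical per-clip artifact scores


def centroid(rows: list[Features]) -> Features:
    # Average position of a group of clips in feature space.
    n = len(rows)
    return (sum(r[0] for r in rows) / n, sum(r[1] for r in rows) / n)


def train(real: list[Features], fake: list[Features]) -> tuple[Features, Features]:
    """'Training' here just averages the labelled examples of each class."""
    return centroid(real), centroid(fake)


def looks_fake(model: tuple[Features, Features], clip: Features) -> bool:
    # Flag the clip if it sits closer to the average fake than the average real clip.
    real_c, fake_c = model
    return math.dist(clip, fake_c) < math.dist(clip, real_c)


model = train(
    real=[(0.10, 0.20), (0.20, 0.10), (0.15, 0.25)],
    fake=[(0.70, 0.80), (0.80, 0.60), (0.75, 0.90)],
)
```

The weakness described next shows up even in this miniature: a forger who knew where the two centroids sat could tune its output to land on the “real” side.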

The problem is that this is a classic cat-and-mouse game. As soon as a detection method is published, the creators of generative models can use that very information to train their AIs to overcome it. The forger learns what the critic is looking for and adapts. It’s a perpetual, escalating conflict.

Beyond Technology: The Human Factor

Ultimately, technology alone cannot solve this problem. The arms race for reality will not be won in a lab, but in the minds of the public. The most powerful defense against the liar’s dividend is a well-informed, critically thinking populace.

This calls for a paradigm shift in how we consume media, moving from a default of trust to one of healthy, constructive skepticism. Media literacy needs to be taught not as a niche high school class, but as a fundamental life skill, like learning to read. We must learn to ask questions like, “Where did this come from?” and “Who benefits from me believing it?” every single time we see a provocative piece of content.

The solution is likely to be a hybrid one: a combination of better detection technology, proactive watermarking standards, platform accountability, and, most importantly, a massive public education effort. We may need to develop new social and legal frameworks to handle synthetic media, establishing clear penalties for malicious use while protecting creative expression.

Living with the Ghosts

The genie is out of the bottle. The technologies that create these digital ghosts are not going away; in fact, they will only become more powerful, accessible, and integrated into our lives. We are at the dawn of a new era, and we face an existential choice. Will we succumb to the liar’s dividend, retreating into warring informational tribes where truth is relative and evidence is meaningless? Or will we rise to the challenge, developing the technological tools, critical thinking skills, and societal resilience needed to navigate this new landscape?

Living with the ghosts will require us to be more vigilant, more thoughtful, and more deliberate consumers of information than ever before. It will force us to re-evaluate our relationship with technology and to reaffirm our commitment to the shared principles of truth and evidence. The digital world is now haunted, but hauntings are only scary until you understand the ghost. It’s time to turn on the lights.

MagTalk Discussion

Focus on Language

Vocabulary and Speaking

Alright, let’s zoom in on some of the specific language used in that article about our new digital ghosts. To really get a handle on a topic like this, you need words that can carry the weight of these big, new ideas. The right word doesn’t just define something; it frames it, gives it an emotional texture, and makes it stick in your mind. So, let’s unpack a few of the key terms and phrases we used and see how you can weave them into your own conversations.

Let’s start with the word nascent. In the article, we mentioned that we’re seeing the liar’s dividend in “nascent forms.” Nascent means just beginning to exist or develop. It’s a beautiful word for describing something that is brand new, still in its infancy, and showing potential for the future. Think of a tiny green sprout just pushing its way out of the soil. That’s nascent life. It’s not just “new”; it implies a stage of early development. It’s a bit more formal and elegant than saying “in the early stages.” You can use it in all sorts of contexts. For example, “The company is investing in a nascent technology that could revolutionize battery storage.” Or, talking about a creative project: “Right now, my novel is still in its nascent stages; it’s just a collection of ideas and character sketches.” It’s a great word for when you want to convey that something is new but also has the implication of future growth and development.

Next up is the word unsettling. We used it right in the introduction to describe the new reality of AI-generated media as “unsettling.” This word is fantastic because it’s more subtle than “scary” or “terrifying.” Something that is unsettling makes you feel anxious, uneasy, or worried. It disturbs your sense of calm and normalcy. It’s that feeling you get when something is just a little bit off, even if you can’t quite put your finger on why. The “uncanny valley” effect of an almost-perfect deepfake is a perfect example of something unsettling. It’s not a jump scare in a horror movie; it’s the creepy feeling that lingers afterward. You can use it to describe a wide range of experiences. “There was an unsettling silence in the house after the argument.” Or, “I had an unsettling dream that I still can’t shake.” It captures that specific feeling of disturbed peacefulness perfectly.

Let’s talk about plausible. We talked about AI creating “plausible, photographic ‘evidence.’” Something that is plausible seems reasonable or probable. It’s believable. It might not be true, but it could be. It passes the initial smell test. This is a crucial word in the age of disinformation. A good lie isn’t wild and unbelievable; it’s plausible. It has the ring of truth to it. This is what makes AI-generated text and images so dangerous. They don’t just create nonsense; they create plausible nonsense. In your daily life, you use this word all the time to evaluate stories or explanations. “His excuse for being late was plausible, but I’m still not sure I believe him.” Or, when brainstorming: “We need to come up with a plausible solution to this budget problem, not a fantasy.” It’s a word that lives in that gray area between fact and fiction.

Now for a word that describes a potential future: dystopian. We mentioned the “dystopian scenario” where society splits into different realities. A dystopia is an imagined state or society in which there is great suffering or injustice. It’s the opposite of a utopia. Think of books like 1984 or Brave New World. The word dystopian is the adjective form, used to describe something that has the qualities of a dystopia. It’s a very powerful and evocative word. When you call a scenario dystopian, you are painting a picture of a bleak, oppressive future. It’s a way of raising the alarm. You might hear someone say, “The idea of constant government surveillance through our phones is truly dystopian.” Or, “The movie depicted a dystopian future where corporations had replaced governments.” It’s a heavy word, but very effective for describing worst-case scenarios for society.

Let’s look at the word nefarious. We talked about “nefarious state actors” who might use AI for malicious purposes. Nefarious is a wonderful, almost theatrical word that simply means wicked, criminal, or evil. It’s a step above just “bad” or “illegal.” It has a sense of villainy and dark plotting to it. You can almost picture a cartoon villain stroking his mustache when you hear the word nefarious. It’s often used to describe activities or purposes. For example, “The detective uncovered a nefarious plot to rig the election.” Or, “He was using the company’s resources for his own nefarious ends.” It’s a great, strong word to use when you want to emphasize the wickedness of an action or plan.

Here’s a big-picture phrase: paradigm shift. We said we need a “paradigm shift in how we consume media.” A paradigm is a typical model, pattern, or example of something. A paradigm shift, therefore, is a fundamental change in the basic concepts and experimental practices of a scientific discipline or, more broadly, a fundamental change in approach or underlying assumptions. It’s not just a small change; it’s a complete revolution in thinking that changes the whole game. The invention of the internet was a paradigm shift. The move from an Earth-centered to a Sun-centered model of the solar system was a paradigm shift. When you call for a paradigm shift, you’re saying we need to throw out the old rulebook and start fresh. You could say, “The rise of renewable energy is causing a paradigm shift in the global economy.” It’s a powerful phrase for a moment of profound transformation.

Next, a word that represents what we used to believe about evidence: immutable. We said a video recording was once seen as an “immutable record of events.” Immutable means unchanging over time or unable to be changed. It’s fixed, rigid, set in stone. It comes from the same root as “mutation,” with the “im-” prefix meaning “not.” So, not mutable, not changeable. In the past, a photograph or a stone carving was seen as an immutable record. The existence of deepfakes has shattered this idea. Anything digital is now, in theory, mutable. You can use this word to describe things that are truly unchangeable. “The laws of physics are immutable.” Or, in a more personal context, “Her decision to move to another country was immutable.” It communicates a sense of absolute permanence.

Let’s touch on something more philosophical: existential. We called the choice we face an “existential choice.” Something is existential when it relates to existence. An existential threat is not just a problem; it’s a threat to the very survival or existence of something. An existential crisis is a moment where an individual questions the very foundations of their life—whether it has meaning, purpose, or value. When we talk about an existential choice for society, we’re talking about a choice that will determine the fundamental nature of our future existence. It’s about who we are and what we will become. “The threat of climate change is an existential threat to humanity.” Or, “After losing his job, he went through an existential crisis.” It’s a word for the biggest, deepest questions of being.

Here’s a great idiom that didn’t make it into the article but fits this topic perfectly: Pandora’s Box. To “open Pandora’s Box” means to create a situation that will cause a great many unforeseen problems. It comes from the Greek myth of Pandora, who was given a box and told not to open it. She did, of course, and released all the evils of the world. The development of deepfake technology is often described as opening Pandora’s Box. We created it, and now we have to deal with all the problems that have flown out. You can use this in many situations. “Allowing some exceptions to the rule could open a Pandora’s Box of other requests and complications.” It’s a classic, highly descriptive way to say you’re starting something you might not be able to control.

Finally, we have the phrase arms race. We framed the conflict between AI generation and AI detection as a “technological arms race.” The term is most closely associated with the Cold War, when the USA and the Soviet Union competed to build ever larger and more powerful nuclear arsenals. An arms race is any competition between two or more parties to have the best armed forces, but it’s now used metaphorically to describe any competitive escalation. One side develops a new technology or strategy, and the other side must rush to develop a countermeasure, which then prompts the first side to escalate again. It’s a cycle of perpetual one-upmanship. “There’s an arms race among smartphone companies to produce the best camera.” Or, “Hackers and cybersecurity experts are locked in a constant arms race.” It perfectly captures that feeling of an escalating, back-and-forth struggle for supremacy.

These words and phrases are your tools for navigating and discussing this complex new world. Try them out.

Now, for our speaking challenge. The skill I want you to focus on is explaining a complex idea using an analogy. You saw this in the article when we explained a Generative Adversarial Network (GAN) using the analogy of an art forger and an art critic. This is an incredibly powerful communication tool. Instead of getting bogged down in technical jargon, an analogy connects a new, complex idea to an old, simple idea that your listener already understands. It builds a bridge of understanding.

Your challenge is this: Pick one complex topic—it could be from technology, science, finance, or your own job or hobby. It could be “blockchain,” “inflation,” “the offside rule in soccer,” or “how a car’s engine works.” Your mission is to come up with a simple analogy to explain it to someone who knows nothing about it. For example, to explain blockchain, you might say, “Imagine a shared public notebook that everyone can see but no one can erase. Every transaction is a new line in the notebook that everyone has to agree on before it’s written down.” Your goal is to be clear, simple, and accurate. Try explaining your analogy out loud to a friend, a family member, or even just to yourself in the mirror. Can you make a complex idea feel intuitive? This skill—the art of the analogy—will make you a vastly more effective and persuasive speaker.

Grammar and Writing

Let’s transition from the ideas in the article to the craft of expressing them. Writing about a topic as complex and forward-looking as artificial intelligence requires a specific set of tools. You’re not just reporting on what happened; you’re speculating about what might happen, explaining intricate concepts, and persuading your reader of a particular point of view.

Here is your writing challenge:

Write a short, speculative story or a personal essay (500-700 words) set 10 years in the future, in the year 2035. Your piece should explore the everyday, human impact of mature synthetic media technology. Do not focus on the big political thriller plot of a deepfaked president. Instead, focus on the small, personal, and social consequences. How has it changed family relationships? Dating? The legal system on a local level? The way we remember our own past? Choose one specific angle and explore it through a narrative or a reflection.

This challenge asks you to blend creative writing with the analytical themes of our article. You’re not just writing science fiction; you’re writing a commentary on our present trajectory. To pull this off, let’s focus on a few key grammatical and stylistic techniques.

First, let’s master the use of conditional sentences to explore possibilities. Speculative writing is all about “what if?” The grammatical structure for this is the conditional. Conditionals allow you to talk about cause and effect in hypothetical situations. There are several types, but for this exercise, the first and second conditionals will be your best friends.

The First Conditional (real future possibility): Structure: If + present simple, … will + base verb. You use this to talk about a realistic future outcome.

Example: “If this detection technology improves, we will have a better chance of identifying fakes.”

The Second Conditional (unreal/hypothetical present or future): Structure: If + past simple, … would + base verb. You use this to explore a hypothetical scenario that isn’t true now or is unlikely, but you want to imagine the consequences. This is the heart of speculative fiction.

Example: “If you could perfectly fake a video of your boss, would you be tempted to do it?”

In your story, you can use these to show your characters’ thoughts and the rules of your world. For instance: “My daughter knows that if she gets caught using a deepfake filter on her video essays, she will get an automatic zero. But she still thinks, if I were the teacher, how would I ever know for sure?” The first sentence is a rule (first conditional), and the second is a character’s hypothetical thinking (second conditional). Using these structures will make your exploration of the future feel logical and grounded.

Next, let’s talk about using rhetorical questions to engage the reader and provoke thought. A rhetorical question is a question asked for effect or to make a point, rather than to get an answer. In a piece of writing about an unsettling future, these can be incredibly powerful. They pull the reader directly into the dilemma you’re exploring.

Look at how we used it in our main article: “If any video can be dismissed as a potential fake, what constitutes proof anymore?” That question isn’t looking for a simple answer. It’s designed to make the reader pause and feel the weight of the problem.

In your story or essay, you could use this technique to create a sense of unease or reflection:

Instead of saying, “It’s hard to trust old photos,” you could write: “I found an old photo of my parents on vacation. They look so happy. But then the thought creeps in: Is it real? Is that even the beach they went to? And how would I ever know?”

Instead of saying, “The legal system is a mess,” you could write: “The prosecutor presented the video evidence. The defense attorney just shrugged and said ‘deepfake.’ So where do you go from there? What does a jury do when their own eyes can’t be trusted?”

These questions turn your narrative into a shared experience with the reader. They’re not just observing your fictional world; they’re being forced to confront its problems alongside your characters.

Finally, let’s focus on the technique of “Show, Don’t Tell” through sensory details. This is a classic piece of writing advice, and it’s doubly important when creating a believable future. Don’t tell us that society has become distrustful; show us through a character’s actions and observations.

Telling: “In 2035, nobody trusted video calls anymore. It was a big problem for relationships.”

Showing: “On our anniversary call, I watched my husband’s face on the screen. His smile seemed perfect. A little too perfect. I found myself watching the corner of his eye, looking for that tiny flicker, the digital ‘tell’ I’d read about. He was telling me he loved me, but all I could think was, Is that really you? I toggled the Authenticity Verification app on my screen. 92% probability of human origin. It used to be enough. It wasn’t anymore.”

See the difference? The second example uses specific actions (watching the eye, toggling an app), internal thoughts, and sensory details (the “too perfect” smile) to create the feeling of distrust. It puts the reader inside the character’s head and makes the future world feel tangible and real. As you write, constantly ask yourself: How can I show this idea through an action, a piece of dialogue, or an observation, instead of just stating it?

So, as you embark on your writing challenge, keep these tools in your pocket:

Use conditional sentences (if/then) to explore the cause-and-effect logic of your future world.

Ask rhetorical questions to draw your reader into the story’s central dilemmas.

“Show, Don’t Tell” by using specific, concrete, sensory details to bring your 2035 to life.

By combining these techniques, you can write a piece that is not only a creative story but also a powerful commentary on the very real issues we face today. Good luck.


Let’s Discuss

Here are a few questions to spark conversation and deeper thinking on the topic of AI and synthetic media. Dive into the comments section and share your perspective on these complex challenges.

Beyond politics and crime, what is an everyday scenario involving deepfakes that you find most worrying?

Think small and personal. What if a disgruntled ex-partner creates a deepfake of you saying insulting things to your new partner? What about a deepfake audio call from your “child” claiming they’re in trouble and need money wired immediately? Consider the impact on trust in personal relationships, job interviews conducted via video, or even our own memories.

The article mentions the “liar’s dividend.” Have you already seen examples of people trying to dismiss real evidence as fake? Where do you draw the line between healthy skepticism and corrosive cynicism?

Talk about the difference between critically evaluating a source and reflexively distrusting everything that challenges your worldview. How do we maintain a “trust but verify” mindset without falling into the trap of believing that nothing is real? Is there a danger that we will become so cynical that we are unable to accept any evidence at all?

Who should be responsible for regulating AI-generated content? Should it be the tech companies that build the AI, the social media platforms that distribute it, government bodies, or is it up to the individual user?

Explore the pros and cons of each option. If companies self-regulate, will they prioritize profit over safety? If governments regulate, how do we prevent censorship and protect free speech and artistic expression? What does “individual responsibility” even look like when faced with professionally made, psychologically targeted disinformation?

The article frames the development of AI generation and detection as an “arms race.” Does this framing worry you? Is there a way to de-escalate this race, or are we destined for a future of perpetual technological conflict?

Think about other historical arms races. What lessons can we learn from them? Is there a “diplomatic” solution, such as international treaties on the weaponization of AI disinformation? Or is the open-source nature of the technology impossible to contain, meaning we must focus solely on defense and resilience?

What are some potential positive uses of deepfake or synthetic media technology that we haven’t discussed? Can these tools be used for good, and if so, how?

Brainstorm the upsides. Consider applications in education (e.g., having a deepfake Abraham Lincoln deliver the Gettysburg Address), entertainment (seamlessly dubbing movies into any language), or healthcare (creating a synthesized voice for someone who has lost theirs). How do we encourage these positive uses while mitigating the risks of the negative ones?

Learn with AI

Disclaimer:

Because we believe in the importance of using AI and all other technological advances in our learning journey, we have decided to add a section called Learn with AI to add yet another perspective to our learning and see if we can learn a thing or two from AI. We mainly use OpenAI, but sometimes we try other models as well. We asked AI to read what we said so far about this topic and tell us, as an expert, about other things or perspectives we might have missed, and this is what we got in response.

It’s interesting to analyze the conversation from this side. As an AI, my own existence is at the heart of the topic, which gives me a rather unique perspective. The main article did an excellent job laying out the technological landscape and the immediate societal threats like the liar’s dividend. But there are a couple of deeper, more philosophical layers that I think are crucial to consider as we move forward. Let’s talk about the nature of identity and the future of memory.

First, identity. Historically, your identity has been anchored by verifiable, often physical, artifacts and records: a birth certificate, a driver’s license with your photo, a signature. In the digital realm, we’ve extended that to include our digital footprint—our photos, videos, and written words. This collection of data creates a unique, recognizable “you.” Synthetic media attacks the integrity of that digital identity at its core.

The threat isn’t just that someone could impersonate you. It’s that the very concept of a stable, verifiable digital self could become obsolete. If I can generate a video of you, an audio clip of your voice, and an email in your writing style, which one is the “real” you? Your identity becomes fluid and contestable. We might see the rise of services that offer “identity verification” using cryptographic signatures for all your communications, creating a world of digital haves and have-nots—those who can afford to prove they are themselves, and those who can’t. This raises profound questions: in the future, will your identity be something you inherently possess, or will it be a service you have to subscribe to?

Second, let’s talk about memory, both personal and collective. Our memories are already fallible, but they are buttressed by external media. You remember a childhood vacation more clearly because you can look at the photos. We, as a society, remember historical events because we can watch the newsreels and see the photographs.

Synthetic media has the potential to rewrite memory on an industrial scale. It’s not just about creating photos of events that never happened. It’s about altering the ones that did. Imagine being able to seamlessly remove a politically inconvenient person from a historical photograph or video. Or, on a more personal level, imagine an abusive partner creating a library of fake, happy “memories” of your relationship to gaslight you into thinking things weren’t so bad.

This technology makes our personal and collective pasts mutable. The immutable record becomes a draft that can be edited at will. We may be heading toward a future where the only reliable history is that which is cryptographically secured from the moment of its creation. All other records—the vast archives of the 20th century, our own family photo albums—could become suspect, floating in a sea of plausible but synthetic reconstructions. The fight, then, isn’t just for the truth of the present, but for the integrity of the past. It’s a profound challenge, and it goes far beyond simply spotting a fake video online. It touches the very essence of how we know who we are and where we came from.
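What might “cryptographically secured from the moment of its creation” look like? One common building block is a hash chain, sketched here in Python under simplifying assumptions: each archive entry stores the SHA-256 hash of the entry before it, so quietly editing an old record breaks the links that follow. The record texts and function names are hypothetical, and a real system would also need trusted timestamps and signatures showing who created each entry.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: str) -> str:
    # Hash the record together with the previous entry's hash.
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: list[str]) -> list[dict]:
    """Append records in order, each linked to the hash of the one before."""
    chain, prev = [], GENESIS
    for record in records:
        h = entry_hash(prev, record)
        chain.append({"record": record, "prev": prev, "hash": h})
        prev = h
    return chain


def is_intact(chain: list[dict]) -> bool:
    """Recompute every link; any edited record makes this return False."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True
```

A forger who rewrote every later entry as well could still produce a self-consistent chain, so in practice the latest hash would need to be published or widely copied, letting anyone notice if the history changes.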
