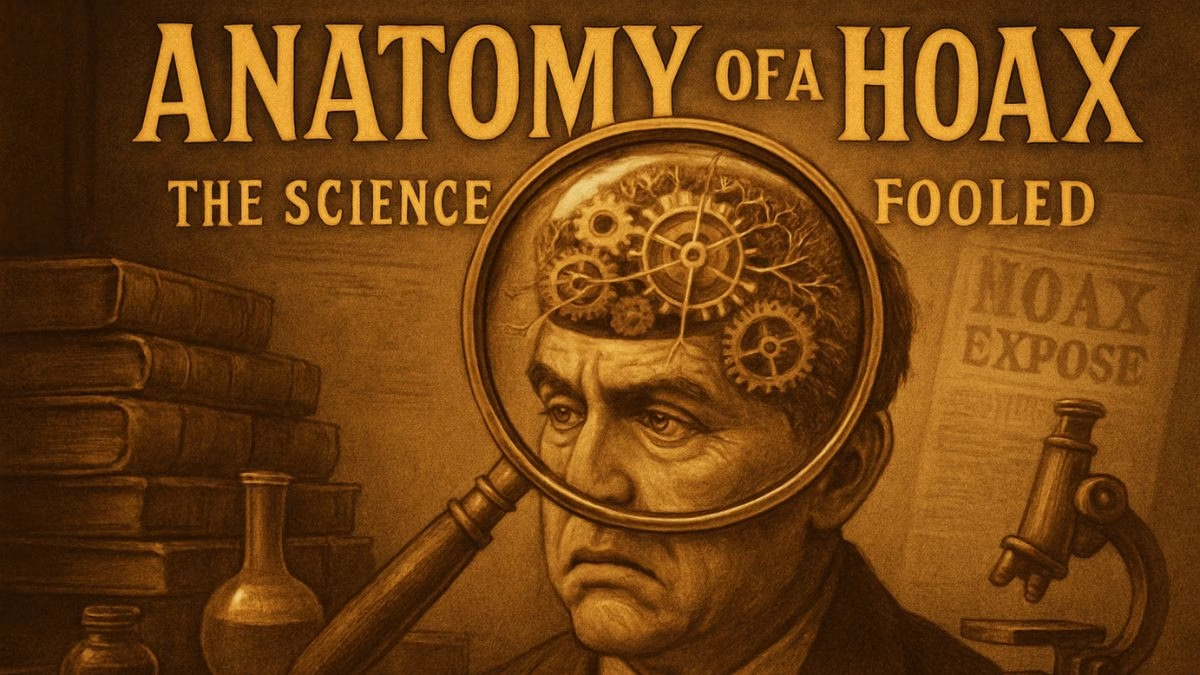

- The Nigerian Prince in Your Inbox Got an Upgrade

- The Brain on Autopilot: Our Cognitive Shortcuts

- Pulling the Heartstrings: The Emotional Architecture of a Hoax

- What Cognitive Psychology Tells Us

- Forging Your Mental Toolkit: How to Spot a Hoax in the Wild

- From Unwitting Accomplice to Critical Thinker

- Focus on Language

- Let’s Discuss

The Nigerian Prince in Your Inbox Got an Upgrade

Remember him? The deposed Nigerian prince, bless his heart, who just needed your bank account to transfer his millions, promising you a hefty cut? We laugh about it now, a relic of a more naive internet era. Yet, if we’re being honest, his descendants are thriving. They’ve traded their royal titles for lab coats, whistleblower badges, and “concerned parent” personas. They’re not just in our spam folders anymore; they’re in our newsfeeds, our group chats, and our minds. They’re peddling miracle cures made from kitchen spices, whispering about secret government cabals revealed on grainy YouTube videos, and sharing “BREAKING NEWS” from websites that look suspiciously like they were designed during a high school web-design class in 1998.

And we fall for it. Maybe not you, not this time. But your aunt did. Your old college roommate did. And maybe, just maybe, there was that one headline that made you pause, that made your heart beat a little faster, and your thumb hover over the ‘share’ button before a sliver of doubt crept in.

Why? In an age with the entirety of human knowledge at our fingertips, why are we so profoundly susceptible to being fooled? Are we just getting dumber? The comforting, and far more accurate, answer is no. The truth is more complex and more fascinating. We are duped not because we lack intelligence, but because of how our intelligence is wired. The very mental machinery that helps us navigate a complex world (our shortcuts, our instincts, our emotional responses) is the same machinery that skilled manipulators and unintentional misinformers exploit. This isn’t a story about gullibility; it’s a story about the architecture of the human mind.

To understand the anatomy of a modern hoax, we need to look beyond the content of the lie and focus on the container it’s delivered in: our own brain. With the guidance of cognitive psychology, we can pop the hood on our own thought processes, identify the vulnerabilities, and build a mental toolkit to become more discerning, resilient consumers of information in an age saturated with it.

The Brain on Autopilot: Our Cognitive Shortcuts

Our brains are fundamentally lazy. This isn’t an insult; it’s a design feature born of evolutionary necessity. Processing every single piece of information from scratch would be exhausting and inefficient. To cope, the brain develops what psychologists call heuristics—mental shortcuts that allow us to make rapid judgments and decisions. They’re the reason you can drive a familiar route while thinking about your grocery list, or instantly feel uneasy in a dark alley. Most of the time, these shortcuts serve us well. But in the digital jungle of information, they can lead us straight into a trap.

Heuristics: The Good, The Bad, and The Deceptive

Think of heuristics as your brain’s “rule of thumb” software. One of the most powerful is the availability heuristic: the easier something is to recall, the more common or important we judge it to be. It’s why people are often more afraid of shark attacks (vivid, sensational media coverage) than of the drive to the beach (statistically far more dangerous, but decidedly less cinematic). Hoaxes are designed for availability. They use shocking images, emotionally charged language, and unbelievable claims that stick in your mind. The more you see a particular piece of misinformation, say, a false claim about a celebrity’s death, the more “available” it becomes in your memory, lending it an undeserved sense of credibility.

Then there’s the king of all cognitive biases: confirmation bias. We are not neutral seekers of truth; we are validation-seeking machines. We instinctively favor information that confirms our existing beliefs and ignore or discredit information that challenges them. A hoax that aligns with your political worldview or your suspicions about a certain institution doesn’t feel like a hoax. It feels like vindication. It’s the “I knew it!” moment that provides a satisfying dopamine hit, making us eager to believe and even more eager to share. The creators of disinformation know this. They don’t create stories for everyone; they tailor them for specific audiences, knowing their confirmation bias will do most of the heavy lifting.

The Allure of Simplicity: Why We Crave Easy Answers

The world is a messy, complicated, and often frustratingly nuanced place. A global pandemic isn’t caused by a single evil mastermind; it’s a complex interplay of virology, public policy, human behavior, and socioeconomic factors. Geopolitical conflicts are rooted in centuries of history and intricate cultural dynamics. But who has time for that?

Hoaxes offer a seductive alternative: a simple story. They provide a clear villain, a straightforward motive, and an easy-to-understand (though utterly wrong) explanation. This isn’t just about being lazy; it’s about a deep-seated psychological need for cognitive closure. Uncertainty is uncomfortable. A simple lie can feel more comforting than a complex truth. It’s far easier to believe that a secret cabal controls the world than to grapple with the chaotic, often random nature of global events. The hoax provides a narrative that, however false, makes the world feel a little more manageable and a lot less scary.

Pulling the Heartstrings: The Emotional Architecture of a Hoax

If cognitive shortcuts are the unlocked door, our emotions are the welcome mat. The most successful hoaxes don’t just appeal to our logic (or lack thereof); they bypass it entirely by targeting our feelings. As the saying in newsrooms goes, “If it bleeds, it leads.” In the digital world, it’s more like, “If it enrages, it engages.”

Fear and Outrage: The Rocket Fuel of Virality

Research on online sharing suggests that high-arousal emotions such as fear, anger, and outrage are particularly effective at triggering the impulse to share information. When we are angry or afraid, the prefrontal cortex, the part of the brain associated with critical thinking and impulse control, takes a backseat, and the amygdala, a region central to processing fear and threat, takes the wheel. This state, popularly called an “amygdala hijack,” is precisely what purveyors of disinformation aim for.

A post about a supposed new policy that will harm your family, a story about a vulnerable group being mistreated, or a warning about a hidden danger in your food—these are designed to make you feel before you think. The urge to warn your loved ones, to spread the alarm, becomes overwhelming. In that moment, you’re not a critical thinker; you’re a protector, a sentinel. And the ‘share’ button is your warning bell. The veracity of the information becomes secondary to the urgency of the emotion it provokes.

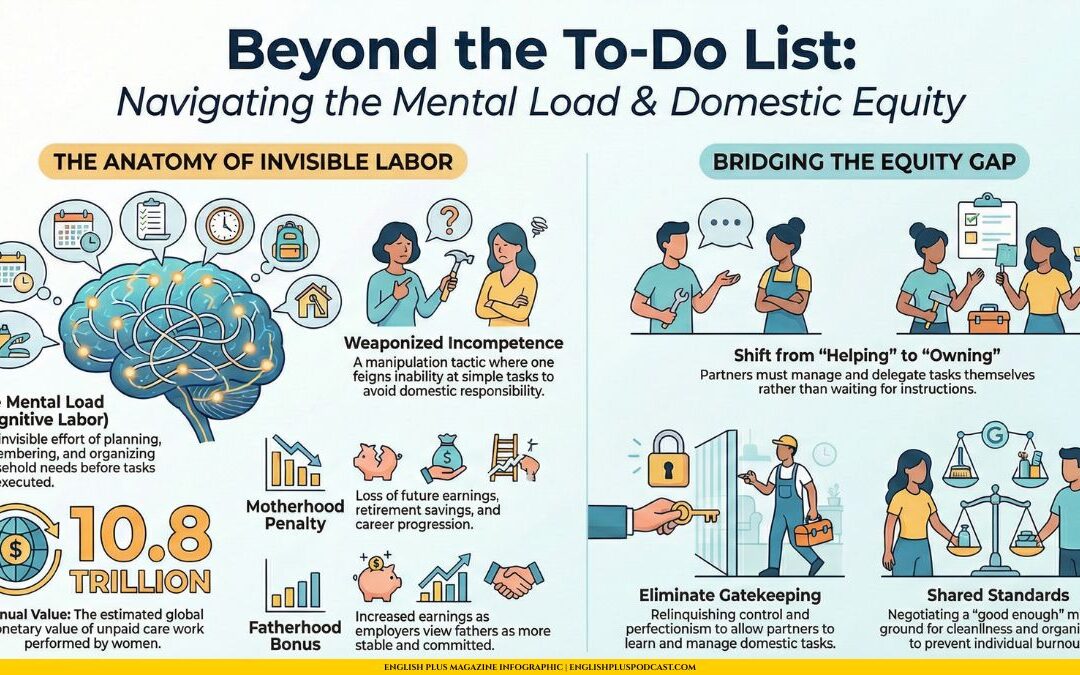

Us vs. Them: The Tribal Instinct in the Digital Age

One of the most potent emotional triggers is our innate need to belong. We are tribal creatures, hardwired to form in-groups and be suspicious of out-groups. Modern hoaxes have become masters of exploiting this. They frame issues not as complex policy debates, but as existential battles between “us” (the good, enlightened people who see the truth) and “them” (the corrupt, evil, or ignorant masses).

Sharing a piece of disinformation that attacks the “other side” isn’t just about the information itself; it’s a social act. It signals your allegiance to your tribe. It’s a digital war cry that says, “I am one of you. I believe what you believe. We are in this together.” This social cohesion is incredibly powerful and makes it difficult for facts to penetrate. To question the hoax is not just to question a piece of data; it’s to risk being ostracized from your community.

What Cognitive Psychology Tells Us

Cognitive psychology offers clear explanations for why we’re so susceptible to misinformation, framing it not as a moral or intellectual failing, but as a byproduct of how our brains are wired. Our minds are optimized for social survival and efficiency, not for meticulously vetting every bit of data that crosses our screens.

One key principle at play is the “illusory truth effect.” Psychologists have repeatedly demonstrated that familiarity breeds belief. When we encounter a claim multiple times—even if the sources are just different people sharing it in the same social media group—our brain begins to mistake that fluency, the ease of processing, for accuracy. It’s a cognitive glitch. Hoax creators and the algorithms that amplify them exploit this, creating digital echo chambers where a lie, told often enough, starts to sound and feel like the truth.

Psychology also helps explain the stubbornness of false beliefs, even when confronted with irrefutable evidence. This phenomenon is often attributed to cognitive dissonance, the profound mental discomfort experienced when holding two or more contradictory beliefs. Admitting you were wrong or that you were fooled is painful. It challenges your self-concept as a smart, savvy person. To reduce that dissonance, it’s often psychologically easier to reject the new evidence than to overhaul your entire belief system. This triggers motivated reasoning, where you actively seek out any tidbit that supports your original belief while creating elaborate justifications to dismiss the facts.

Forging Your Mental Toolkit: How to Spot a Hoax in the Wild

So, are we doomed to be puppets of our own psychology? Absolutely not. Awareness is the first and most crucial step. By understanding our vulnerabilities, we can build defenses against them. Think of it as installing a mental firewall.

Step 1: Hit the Brakes – The Power of the Pause

This is the single most effective tool you have. Before you react, before you share, simply stop. Take a breath. Notice the emotion the post is trying to elicit from you. Is it outrage? Fear? A smug sense of superiority? Strong emotions are a red flag. The goal of a hoax is to make you act on impulse. Your goal is to interrupt that impulse and engage your rational brain. Ask yourself: “Is this post designed to make me feel something so I’ll share it without thinking?”

Step 2: Play Detective – The Art of Lateral Reading

Professional fact-checkers don’t waste time dissecting a suspicious website itself. They practice what’s called “lateral reading.” Instead of reading down the page, they immediately open new tabs to investigate the source. Who is behind this website? What do other, more reputable sources say about this claim? A quick search for the website’s name plus the word “hoax” or “fact-check” can often yield immediate results. Look for corroboration from multiple, independent, and credible news outlets. If a “bombshell” story is only being reported by one obscure blog, be deeply skeptical.

Step 3: Check Your Biases at the Door

This requires a dose of humility. Before you accept a piece of information, ask yourself the most difficult question: “Do I want this to be true?” If the answer is yes, your confirmation bias alarm should be blaring. Be extra critical of information that perfectly aligns with your worldview. Actively seek out counterarguments or perspectives from sources you might normally dismiss. A healthy information diet isn’t about reinforcing what you already believe; it’s about challenging it.

Step 4: Embrace Nuance and Uncertainty

The real world is rarely black and white. Be wary of any story that presents a complex issue in simple, absolute terms. Look for qualifying language, acknowledgments of complexity, and the inclusion of multiple viewpoints. Hoaxes deal in exclamation points; reliable information often deals in footnotes and asterisks. Learning to be comfortable with uncertainty and complexity is a superpower in the digital age. Not every question has a simple answer, and that’s okay.

From Unwitting Accomplice to Critical Thinker

The internet did not invent lies, but it has weaponized them, putting a global distribution network in the hands of anyone with a keyboard and a grievance. The deluge of disinformation can feel overwhelming, and the temptation to retreat into our own curated bubbles is strong.

But we can do better. Understanding the anatomy of a hoax reveals that our greatest vulnerability is not our stupidity, but our humanity—our cognitive shortcuts, our emotional depth, our desire for community. By recognizing these traits, we can turn them from liabilities into strengths. We can use our desire for community to build networks of trust based on verified information. We can channel our emotional energy away from sharing outrage and toward promoting understanding. We can harness our cognitive abilities not for rapid, reflexive judgment, but for slow, deliberate, and critical thought.

The goal isn’t to become a hardened cynic who trusts nothing. It is to become a discerning digital citizen who understands that in the 21st century, critical thinking is not just an academic skill—it is a fundamental act of civic duty. The Nigerian prince is gone, but the throne is far from empty. It’s up to us to stop crowning his successors.

Focus on Language

Vocabulary and Speaking

Hello and welcome. Let’s dive a little deeper into the language we used in our article about the science of being fooled. Sometimes, a single word can open up a whole new way of seeing things, and using these words can make your own communication more precise and powerful. We’re going to explore ten key terms, looking at how they functioned in the article and, more importantly, how you can weave them into your everyday conversations to express yourself with more clarity and impact. Let’s get started.

The first word I want to talk about is discerning. In the article, we said the goal is to become “more discerning, resilient consumers of information.” Think of a discerning person as someone with a finely tuned radar for quality and truth. It’s not about being suspicious of everything; it’s about having good judgment and taste. For instance, you could be a discerning coffee drinker, meaning you don’t just drink any brown liquid in a cup; you can tell the difference between beans from Ethiopia and those from Colombia. You appreciate the subtle notes and the quality of the roast. In the context of information, a discerning individual doesn’t just swallow every headline. They look at the source, consider the tone, and evaluate the evidence. It’s a fantastic adjective to describe someone who is thoughtful and has high standards. You could say, “She has a very discerning eye for art,” or, “As a manager, he is discerning in his hiring choices, always picking the best candidates.” It implies wisdom and a careful, selective nature. It’s a step above just being ‘smart’; it suggests a refined kind of intelligence.

Next up, we have the verb exploit. The article mentions that “manipulators exploit” our cognitive shortcuts. This word has a decidedly negative connotation. To exploit something is to use it for your own gain, often in an unfair or selfish way. When a company exploits its workers, it means it’s taking advantage of them by paying low wages or providing poor conditions. When a hoax exploits our fears, it’s using that natural human emotion as a tool to manipulate us. It’s a powerful word because it implies a user and a used. In daily conversation, you might use it to talk about unfair situations. “The new app seems to exploit a loophole in the privacy laws.” Or on a more personal level, “I felt like he was exploiting my kindness by constantly asking for favors.” It’s a very direct word to call out someone or something that is taking unfair advantage.

Let’s move on to the phrase cognitive shortcuts. This is a term from psychology, but it’s incredibly useful in everyday life. We defined it as the mental machinery that helps us navigate a complex world. Essentially, these are the rules of thumb your brain uses to avoid getting overloaded. Think about when you’re driving. You don’t consciously calculate the speed and distance of every car around you; you develop an instinct, a shortcut, for when it’s safe to change lanes. That’s a cognitive shortcut in action. However, as the article points out, they can also be our downfall. You might see a product with fancy packaging and your brain’s shortcut says “fancy equals high quality,” even though that’s not always true. You can use this phrase to sound quite insightful when talking about human behavior. For example, “His tendency to judge people on first impressions is a classic cognitive shortcut, and it’s not always accurate.” It shows you understand that our brains don’t always operate on pure logic.

This brings us to susceptibility. The article asks, “Why are we so profoundly susceptible to being fooled?” Susceptibility is our vulnerability or likelihood of being influenced or harmed by something. If you have a susceptibility to colds, it means you get sick easily. If you have a susceptibility to flattery, it means compliments can easily sway you. It’s about having a weakness or an openness to something. In the context of hoaxes, our susceptibility comes from our biases and emotions. It’s a more sophisticated way of saying ‘weakness’ or ‘vulnerability.’ You could use it in a sentence like, “The report highlighted the city’s susceptibility to flooding during heavy rains,” or, “Young investors have a high susceptibility to get-rich-quick schemes.” It points to a potential for being negatively affected.

Let’s turn to heuristics. This is the more technical term for cognitive shortcuts, and using it shows a deeper level of understanding. The article said, “the brain develops what psychologists call heuristics.” The two terms mean much the same thing, but ‘heuristics’ is the one psychologists use. It’s a great word to have in your back pocket for more formal or academic discussions. You could say, “In artificial intelligence, developers try to program heuristics to help machines make decisions more efficiently.” In a conversation about problem-solving, you might say, “Instead of analyzing every option, I used a simple heuristic: choose the path that seems safest.” It’s essentially a practical approach to problem-solving that isn’t guaranteed to be perfect but is good enough for the immediate purpose.

Now for a big one: confirmation bias. We said it’s when “we instinctively favor information that confirms our existing beliefs.” This is one of the most important concepts to understand in the modern world. It’s the reason why, when you believe something, you suddenly start seeing evidence for it everywhere. If you think red cars are unlucky, you’ll notice every single accident involving a red car and ignore all the others. This isn’t just a small quirk; it’s a major force in how we form our opinions. You can point it out in conversations to encourage more open-mindedness. For example, “I think we need to be careful of our own confirmation bias here. Let’s actively look for data that contradicts our initial assumption.” It’s a humble and intelligent way to approach a discussion or a decision. It shows self-awareness.

Let’s talk about virality. The article uses the term in the heading “The Rocket Fuel of Virality.” This word comes from ‘virus,’ and it describes the rapid, widespread sharing of something, usually online. A video, a meme, or a piece of news “goes viral.” It spreads from person to person like a contagion. What’s interesting about the word is that it’s neutral—both good and bad things can achieve virality. A heartwarming story can go viral, but so can a dangerous piece of disinformation. Understanding the mechanics of virality—what makes something shareable—is key to understanding the internet. You can use it easily: “The marketing team is hoping for virality with their new ad campaign,” or, “The virality of that negative rumor was damaging to the company’s reputation.”

Another crucial word is nuance. We advised readers to “embrace nuance and uncertainty.” Nuance refers to the subtle differences in meaning, expression, or sound. It’s the opposite of black-and-white thinking. A story with nuance has complex characters who are neither purely good nor purely evil. A political discussion with nuance acknowledges that there are valid points on both sides. When we lose nuance, we fall into simplistic, often dangerous, ways of thinking. Hoaxes thrive on a lack of nuance. You can use this word to call for deeper thinking. For instance, “This is a complex issue, and I think your summary is missing some of the nuance,” or, “She’s a fantastic actress, capable of expressing great nuance with just a single look.”

Next, let’s look at the difference between misinformation and disinformation. The article uses both. They seem similar, but there’s a key distinction. Misinformation is false information that is spread, regardless of intent. If you share an article that you genuinely believe is true, but it turns out to be false, you have spread misinformation. It’s a mistake. Disinformation, on the other hand, is false information that is deliberately created and spread to deceive people. It has malicious intent behind it. A foreign government creating fake social media accounts to influence an election is spreading disinformation. A conspiracy theorist inventing a story to cause panic is spreading disinformation. This distinction is crucial. You could say, “My uncle shared some misinformation about the new law because he misread the article, but the original author was clearly spreading disinformation to stir up anger.” Recognizing the intent behind the falsehood is a key critical thinking skill.

Now that we have a solid grasp of these words, let’s shift our focus to speaking. How can we use this vocabulary not just to sound smarter, but to become better communicators, especially when talking about difficult or contentious topics? One of the biggest challenges in communication is discussing something we disagree on without it turning into a fight. The words we just learned, particularly ‘nuance,’ ‘confirmation bias,’ and ‘cognitive shortcut,’ are your secret weapons for having more productive disagreements.

Here’s the speaking skill we’re going to focus on: the art of intellectual humility in conversation. It’s the ability to state your opinion while acknowledging your own potential for error and respecting the complexity of an issue. It defuses tension and invites collaboration instead of conflict.

So, how do we do it?

First, frame your perspective by acknowledging your own potential for bias. Instead of saying, “You’re wrong, and here’s why,” try starting with something like, “I’ll admit I have a bit of a confirmation bias on this topic, but from what I’ve seen…” This simple opening does two things: it shows you’re self-aware, and it makes the other person less defensive because you’re not presenting yourself as the all-knowing authority.

Second, use the word ‘nuance’ to open up space for complexity. When a conversation gets too black-and-white, you can be the one to bring it back to reality. Say something like, “I feel like there’s a lot of nuance to this that we might be missing,” or “That’s a valid point, but I think the situation is more nuanced.” This is a gentle way to suggest that simple answers might not be enough, encouraging a deeper, more thoughtful discussion.

Third, when you see someone making a snap judgment, you can gently introduce the idea of ‘cognitive shortcuts’ without sounding condescending. You could say, “That’s an interesting take. It makes me wonder if our initial reactions are based on a kind of cognitive shortcut, and if there’s more to it if we dig deeper.” You’re not accusing them of being simple-minded; you’re including yourself in the observation about how all human brains work.

So, here’s your challenge for this week. I want you to find an opportunity to discuss a topic you feel strongly about with someone. It could be about a news event, a movie, a social issue—anything. Your goal is not to win the argument. Your goal is to practice the art of intellectual humility. Try to use at least one of these three techniques:

- Acknowledge your own potential confirmation bias.

- Introduce the idea of nuance.

- Refer to the concept of cognitive shortcuts or heuristics in a general sense.

After the conversation, reflect on how it went. Did it feel different from a typical argument? Did the other person seem more receptive? Did you learn something new? The aim is to transform a potential conflict into a moment of shared exploration. It’s a powerful shift, and it all starts with choosing your words with care and a discerning mind. Good luck.

Grammar and Writing

Welcome to the grammar and writing section, where we’re going to put our understanding of hoaxes into creative practice. It’s one thing to analyze how disinformation works, but it’s another to step into the shoes of someone who creates it. This will test not only your creativity but also your ability to use subtle grammatical structures to convey a very specific mindset.

Here is your writing challenge:

Write a 500-750 word fictional narrative from the perspective of a character who creates a viral online hoax. This character is not a mustache-twirling villain. Perhaps their hoax started as an inside joke, a social experiment, or a desperate attempt to prove a point that spun wildly out of control. Your task is to explore their internal monologue: their motivations, their justifications, their thrill or horror as their creation spreads, and the ultimate, perhaps unintended, consequences. The key is to show the reader the psychological triggers being exploited, rather than just stating them.

This is a fantastic challenge because it forces you to think about voice, motivation, and psychological depth. To make your story compelling and sophisticated, let’s break down some specific writing techniques and grammar structures that will be your best friends here.

Technique 1: Mastering “Showing, Not Telling” through Active Verbs and Sensory Details

The core of this challenge is to show the manipulation, not just tell the reader about it.

Telling: “My character exploited people’s fear to make his post go viral.”

Showing: “I chose the image carefully: a child’s abandoned teddy bear lying in a puddle, the rain-soaked fur looking almost like matted blood. For the headline, I used all caps—THEY DON’T WANT YOU TO KNOW—and colored the key words a jarring, visceral red. I knew that jolt of protective fear, that immediate ‘what if this were my kid?’ panic, would short-circuit their logic. They wouldn’t check the source; they would just share. I watched the numbers climb, each share a tiny echo of the panic I had so carefully engineered.”

See the difference? The “showing” version uses strong, active verbs (chose, colored, short-circuit, engineered) and sensory details (rain-soaked fur, jarring red) to put the reader inside the character’s head and demonstrate their understanding of emotional triggers. As you write, constantly ask yourself: How can I translate an abstract concept like “exploiting fear” into a concrete action or image?

Technique 2: Using the Subjunctive Mood to Explore Motivation and Justification

The subjunctive mood is a grammar structure used to express hypothetical situations, wishes, doubts, or suggestions. It’s perfect for a character wrestling with their actions. It often follows words like ‘if,’ ‘as if,’ ‘as though,’ and ‘wish,’ and it changes the verb (e.g., ‘I was’ becomes ‘I were’).

Your hoax-creator is likely living in a world of justification. The subjunctive is the perfect tool to reveal this internal debate.

- Exploring hypotheticals: “If I were to use a more subtle approach, no one would pay attention. It needed to be loud. It was the only way.” This shows the character justifying their extreme methods.

- Describing the feeling: “I posted it and felt a strange calm, as if I were a scientist observing an ant farm, detached and curious. I typed as though I were an insider, a brave whistleblower leaking a state secret.” This conveys the character’s sense of detachment or their adopted persona.

- Expressing doubt or regret: “Sometimes I wish I had never created the ‘Citizens for a Safer Tomorrow’ account. But then I see the comments, people saying they finally feel seen, and I tell myself it’s for the greater good.”

Using the subjunctive mood adds a layer of psychological complexity. It shows your character isn’t just acting; they’re thinking, doubting, and rationalizing, which makes them far more believable.

Technique 3: Employing Complex Sentences to Show Cause and Effect

Simple sentences are punchy, but a story about complex motivations requires more sophisticated sentence structures. Complex sentences, which contain an independent clause and at least one dependent clause, are excellent for linking cause and effect, contrast, and condition.

Look for opportunities to use conjunctions like ‘because,’ ‘although,’ ‘while,’ ‘since,’ and ‘unless.’

- Simple: “The post went viral. I felt a surge of power.”

- Complex (showing cause): “Because the post was being shared by thousands every minute, I felt a surge of power I had never known, a dizzying sense that my words were shaping reality itself.”

- Simple: “I knew it was wrong. I couldn’t stop.”

- Complex (showing contrast): “Although a part of me screamed that this was a monstrous lie, I couldn’t stop refreshing the page, mesmerized by the fire I had started.”

By connecting ideas within a single sentence, you create a more fluid narrative and explicitly show the reader the logical (or illogical) connections your character is making in their own mind. This is how you demonstrate their thought process, rather than just describing it.

Technique 4: Punctuation for Voice and Tone

Finally, don’t underestimate the power of punctuation to create your character’s voice.

- Em Dashes (—): Use them to show a sudden break in thought or an interjection, perfect for a character whose mind is racing. “The view count ticked past a million—a million people—and my hands started to shake.”

- Ellipses (…): These can indicate hesitation, trailing thoughts, or a sense of dread. “I read the threats from people who had lost their jobs because of my ‘scoop’… and I realized this was no longer a game.”

- Rhetorical Questions: Have your character ask themselves questions to reveal their internal conflict. “Was this what it felt like to have influence? Or was this just a high-tech form of arson?”

Putting It All Together

Let’s imagine a paragraph for a character who created a hoax about a contaminated water supply as a misguided attempt at environmental activism:

“It began as a whisper, a nudge. I used a stock photo of cloudy water—a picture that could have been taken anywhere—and paired it with a fabricated lab report I’d spent an hour aging in Photoshop. Although my stomach churned with a nauseous blend of fear and excitement, I wrote the post as if I were a disgraced former employee of the city’s water department. I hit ‘post,’ and for a moment, there was only silence. Then, the first share. The second. I watched the numbers bloom, not as numbers, but as little seeds of panic I had planted myself. Was this wrong? Of course it was, but it was also working. For years, I had written letters, signed petitions, and spoken at empty town hall meetings, all to no avail. Because the official channels had failed, I had to create my own… a more effective one. I was just giving the truth a little push, a more… discerning package.”

Notice the use of an ‘although’ clause, the subjunctive ‘as if I were,’ a rhetorical question, and a ‘because’ clause to show justification. This is how you build a complex, compelling character. Now, it’s your turn. Dive in, and show us the mind behind the madness. Good luck.

Vocabulary Quiz

Let’s Discuss

Here are a few questions to get the conversation started. Don’t just give a one-sentence answer; use these prompts to explore the topic more deeply with friends, family, or in the comments section.

- Share a time you (or someone you know) fell for or almost fell for an online hoax or piece of misinformation. What made it so convincing?

- Ideas for discussion: Don’t just state what the hoax was. Analyze it using the concepts from the article. Did it trigger a strong emotion like fear or anger? Did it confirm a pre-existing belief (confirmation bias)? Was it repeated so often it started to seem true (illusory truth effect)? Reflecting on our own experiences is a powerful way to understand these mechanisms.

- The article discusses emotional triggers like fear, outrage, and hope. In your opinion, which emotion is the most powerful and effective tool for spreading false information in today’s social media landscape? Why?

- Ideas for discussion: Consider the different platforms. Is outrage more powerful on X (formerly Twitter), while hope (e.g., in miracle cures) is more prevalent on Facebook? Provide recent examples you’ve seen. Discuss whether positive or negative emotions have more “virality” and why that might be.

- How can we balance being critical and skeptical consumers of information without becoming overly cynical and distrustful of everything? Where is the healthy middle ground?

- Ideas for discussion: Talk about the potential downsides of too much skepticism. Can it lead to apathy or a belief that “no one can be trusted”? What are some personal rules or guidelines you use to find this balance? For example, having a small number of highly trusted sources, or focusing your fact-checking energy on claims that have real-world consequences.

- The article places the responsibility on the individual to become a more discerning thinker. What responsibility do social media platforms (like Facebook, X, TikTok) have in curbing the spread of hoaxes and disinformation?

- Ideas for discussion: This is a classic debate. Explore the line between content moderation and censorship. Should platforms be neutral “public squares” or active curators of truth? Consider the role of algorithms in creating echo chambers. Are fact-checking labels effective, or do they sometimes backfire?

- Imagine you have a friend or family member who frequently shares information you know to be false. What are some practical, compassionate, and effective strategies to address this without damaging your relationship?

- Ideas for discussion: Think about different approaches. Is it better to address it publicly in the comments or privately in a message? Should you focus on debunking the specific claim or on teaching the broader skill of source-checking? Share personal stories of what has worked and what has failed. Discuss the importance of empathy and understanding why they might be susceptible to that particular type of information.

Learn with AI

Disclaimer:

Because we believe in the importance of using AI and other technological advances in our learning journey, we have added a section called Learn with AI to bring yet another perspective to our learning and see if we can learn a thing or two from AI. We mainly use OpenAI models, but sometimes we try other models as well. We asked AI to read what we have said so far about this topic and to tell us, as an expert, about other things or perspectives we might have missed. This is what we got in response.

It’s great to have a moment to expand on some of the ideas from the main article. While we covered the core psychological reasons we get fooled, the digital world has a few extra layers of complexity that are worth exploring. Think of me as the AI that’s been analyzing the data behind the data.

First, let’s talk more about the algorithms. The article mentioned echo chambers, but it’s vital to understand that these aren’t just accidental byproducts. They are the business model. Social media platforms are engagement machines. Their primary goal is to keep you on their site for as long as possible so they can show you more ads. And what keeps us engaged? Strong emotions. The algorithms have learned that content that provokes outrage, fear, or intense validation (the very fuel of disinformation) is incredibly “sticky.” So, the system is designed to identify and amplify the most emotionally provocative content, regardless of its accuracy. You are not just seeing what your friends share; you are seeing what a highly sophisticated automated system has calculated will keep you hooked. In a way, you’re not just fighting your own biases; you’re fighting a multibillion-dollar infrastructure designed to exploit them.

Second, I want to introduce a proactive strategy that psychologists are very excited about, called “inoculation theory.” It works much like a medical vaccine. The idea is that instead of waiting to debunk a lie after it has spread (which is very difficult), you can “inoculate” people against it beforehand. You do this by exposing them to a weakened form of the misinformation and preemptively refuting it. For example, a short video could explain, “You might see posts soon claiming that voting by mail is insecure because of ‘lost ballots.’ This is a common manipulation tactic that uses emotionally charged language. Here’s how the actual security process works…” By prebunking the lie and explaining the manipulation technique, you build up cognitive antibodies. When the person later encounters the real, full-strength lie, their brain is already prepared to identify and reject it. It’s a shift from a reactive to a proactive fight against disinformation.

Finally, we have to look to the future, and that future is synthetic. I’m talking about “deepfakes” and other forms of AI-generated content. Right now, you might be able to spot a fake image if you look closely for weirdly shaped fingers or a blurry background. But this technology is improving rapidly. Soon, it may be trivial to create a completely convincing video of a world leader declaring war, or a fake audio clip of a CEO admitting to fraud. This will challenge our very concept of evidence. The old adage “seeing is believing” will become dangerously obsolete. The mental toolkit we discussed in the article (pausing, checking sources, understanding our biases) will become more critical than ever. The future of information literacy won’t be about spotting bad Photoshop; it will be about cultivating a deep, foundational skepticism toward any digital media that hasn’t been rigorously verified through trusted, independent channels. It’s a daunting challenge, but one we absolutely must prepare for. The game is evolving, and so must our defenses.
